Fundamentals Of Iterative Theory

June 18, 2012 Leave a comment

Iterative Theory is a study of trying to ‘solve a problem all in one go’ versus trying to ‘solve it by splitting it up into parts’.

Iterative theory is not about comparing different project management or problem solving methodologies. At it’s most basic Iterative Theory does not care what methodology you are using – but it does care how you apply that methodology.

This post is an introduction in applying Iterative Theory to Structured Stochastic Problems. In later posts we will develop an argument to show that there is an unavoidable additional cost incurred in removing the ambiguity inherent in a project. We will then see how to minimise that extra cost through the use of Iterations.

Abstract Version

As a first step consider splitting a project into several parts. Clearly if it cannot be split up then you have no opportunity to take an iterative approach. But let’s assume we can split it up into parts. What does this mean? It means that for each part we can run our methodology on that part alone and then when that part is done move onto the next part and repeat the process.

So a fundamental of Iterative Theory is that we must have a problem that we can split into parts.

Iterative Theory assumes some level of ignorance or uncertainty on the side of the problem solver. If there is no uncertainty – in other words I can prove that my proposed solution is correct – then there will be no benefit in splitting it into iterations. There is a subtlety here in that I may well be able to prove that my proposed solution exactly satisfies the problem as it has been given to me – but that does not help if there is some uncertainty regarding the accuracy of the initial statement of the problem.

So a fundamental of Iterative Theory is that there must be some uncertainty in the correctness of the solution.

Iterative Theory also requires an ‘Oracle’ to tell us if we have got it right. The Oracle represents the external source of truth regarding the solution. Only through presentation of a real solution can we know for sure that we have got it right. This is closely related to the concept of parts; what we really mean by being able to split a project into parts is that our Oracle can establish the correctness of the parts as they come.

So a fundamental of Iterative Theory is that there is some Oracle that can tell us if each part of the solution is correct.

Iterative Theory also requires that there is some connection between the parts of the problem. The connection manifests itself in the way that feedback from earlier parts of the problem alters the possibilities on later parts of the problem. If there is no connection between the different parts of the problem then applying iterative theory will not help.

So a fundamental of Iterative Theory is that the parts of the problem are interconnected.

Iterative Theory is about the impact of using iterations on the efficiency of a project or problem solving exercise. This links back to the role of the Oracle in that if the Oracle tells us we have got it wrong then there must be some form of penalty. If we can try as many solutions as we like with no penalty then it does not matter if we have a good approach or bad approach.

So a fundamental of Iterative Theory is that there is a penalty for getting it wrong.

Taking all of these fundamentals together we can say that Iterative Theory is about ‘Solving Stochastic Structured Problems Efficiently’. Lets break this definition down and look at each part individually:

- A Structured Problem is one where there are many individual parts to it and they depend upon each other.

- To know that we have Solved a part of the problem we need an Oracle who can provide feedback as we go along.

- Stochastic means that there must be an element of uncertainty regarding the possible solutions for the individual parts.

- And Efficiently means that there is an incentive to find a cost effective approach to solving the problem.

I will use the abbreviation ‘SSP’ for a Stochastic Structured Problem.

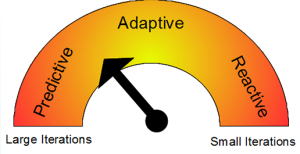

As we will see when it comes to project management you have a choice to treat the project as an SSP or not irrespective of the nature of the problem itself. What I mean by this is that you can easily mismatch your approach to the problem that you have. Getting this wrong ie adopting a predictive approach can lead to significant inefficiency.

Solving a problem that is an SSP is a pretty sophisticated challenge. Before getting into that we will be starting with simpler types of problems according to the following problem classification scheme:

-

Simple

Structured

Deterministic (Fixed) Simple Deterministic

Structured Deterministic

Stochastic (Random) Simple Stochastic

Structured Stochastic

In studying the decision making process we will work our way around this matrix starting from the top left and working to the bottom right. Having already spent time on the Structured Stochastic case lets have a quick look at the other boxes.

A Simple Deterministic problem is one where each decision can be made in isolation ie each decision is independent of any of the others. Moreover it is completely deterministic in that whatever choice we make will have a given pre-determined outcome. Problems like this are amenable to a simple approach at finding a solution; if each choice has a fixed consequence we just need to consider each choice in turn and then choose the best one.

A Simple Stochastic problem is one where we can still make each decision in isolation. However there is an element of chance involved in that for each decision we make there are a range of possible outcomes. Problems like this are solved by considering the probabilities of the various outcomes. By analysing the most and least likely outcomes of our decision we can make the ‘best’ choice. This type of problem is the staple of Decision Theory that we will examine in more detail later.

A Structured Deterministic problem is one where there is no uncertainty but there are a lot of interdependent decisions to be made. The more decisions there are to be made the harder it gets to solve such a problem because there are more and more possible combinations to consider. The lack of randomness is a blessing but even so such problems are usually very hard to solve in practice. The Travelling Salesman problem is a classic example of this type of problem.

And finally we are back to Stochastic Structured problems. These types of problems are naturally the most difficult to solve and their analysis is a major part of Iterative Theory. Most real world problems are of this type.

Practical Examples

Now lets look at some examples that will (hopefully!) help clarify all of this.

| Playing Chess. This problem is made up of many parts – the moves that will be made. Also the moves you make depend upon each other. The element of chance comes from the moves your opponent makes. Some moves are good and some are bad. Hence (in practical terms) this is an SSP. In a later post we will consider the impact of your opponents moves – that takes us into Game Theory– and how the goal of our opponent affects our strategy. |

|

| Betting on a horse race. This is not an SSP as we are only making a single decision. The only ‘part’ of the problem is which horse we bet on. The factors affecting the outcome of the race may well be complex but because we are only making one decision it is not an SSP. |

| Playing Roulette. This is not an SSP simply because each decision is independent of the one before. The lack of dependency turns this into a series of simple problems. |

|

| Playing a hole of Golf. If we say that the decisions to be made are which club to use to take each shot then this is an SSP. The best club to use clearly depends upon the somewhat random (especially if you are me) result of the previous shot. |

| Decimal expansion of Pi. While it may in fact be quite difficult to work out one by one what the digits of Pi are there certainly exist algorithms to do so. There is no element of randomness involved. Therefore this is not an SSP. |

|

General Themes

It is possible for a problem to become Structured if we have to repeat it several times – provided something can be learnt from earlier outcomes to inform later ones. For instance, taking the Horse Racing example, if we are going to make several bets we might start to learn things from earlier races that would alter our decisions on later ones. Maybe a particular jockey is having a good day. Or horses starting on the outside are consistently doing worse than expected. However if we take the Roulette example – assuming it is perfectly fair – then there is nothing to be gained from earlier spins to inform later ones.

Simple does not refer to the potential difficulty in making up your mind or taking all the variables into account but to the fact that there is no dependency between decisions. For instance in the Chess example the decision for any one particular move is Simple. It may be that to work out the best move would defeat the most powerful computer in the world but without the chance to take advantage of feedback this single move is not a Stochastic Structured Problem.

Another important theme is feedback. For a problem to be Structured there must be feedback after each decision that can be used to inform subsequent decisions. In the Golf example the feedback comes from where the ball ends up after each shot. If you had to decide which club to use for each shot up front then the problem would not be Structured. While you can see the feedback after each shot you are not allowed to act upon it. The approach taken makes the problem into a simple one with only a single decision being made up front.

In the Roulette example while you get feedback after each bet it is useless to you as it does not provide any information regarding future bets. It is important that the feedback comes from an external source or ‘Oracle’. If you can work out what the feedback will be for your decision before you make it then the problem is not a Structured one. In the π example while the actual value of the next digit might seem random you know from your algorithm that it will be correct. So the problem is not an SSP.

Feedback is required to resolve the uncertainty. In the Journey example the uncertainty is provided by the vagaries of the transport system. In the Chess example it is caused by the potential responses of your opponent. This is a sliding scale; you might argue that in the Building example there is very little uncertainty hence it is ‘only just’ a Stochastic Structured Problem.

There is an element of subjectivity in the classification of a problem. It may be that a Structured Problem for one person is not structured for another. It will depend upon your level of knowledge which will affect how you Frame the problem.

We have five factors to consider when classifying a problem as being an interesting Stochastic Structured Problem:

- Is there a clearly defined goal or way of deciding better or worse outcomes?

- Is there a single decision to be made or many?

- Is there some uncertainty in the outcomes given your decisions?

- Is there an interdependency between the outcomes of each decision?

- Is there any opportunity for useful feedback before reaching the goal?

If a problem satisfies these five requirements then it is a Stochastic Structured Problem and we can apply Iterative theory.

Virtually all real life projects satisfy these requirements.